Publications

We make an effort to keep this list up to date; however, for the most reliable list of very recent publications, please see this Google Scholar page. Paper summaries, videos, and BibTeX entries are available under "Details and BibTeX".

Conference and Journal Publications By Year

2024-Present

- J. Huang, I. Sheidlower, R. M. Aronson, E. S. Short. "On the Effect of Robot Errors on Human Teaching Dynamics". International Conference on Human-Agent Interaction (HAI), Swansea, UK, November 2024. [PDF]

Details and BibTeX

This paper presents a user study to investigate how the presence and severity of robot errors affect three dimensions of human teaching dynamics: feedback granularity, feedback richness, and teaching time, in both forced-choice and open-ended teaching contexts. We show that people tend to spend more time teaching robots with errors, provide more detailed feedback over specific segments of a robot’s trajectory, and that robot errors can influence a teacher’s choice of feedback modality. This work advances our understanding of the complex relationship between robot behavior and human teaching, an essential topic for designing effective interfaces for robot learning from humans.

@inproceedings{huang2024hai, author = {Huang, Jindan and Sheidlower, Isaac and Aronson, Reuben M and Short, Elaine Schaertl}, title = {On the Effect of Robot Errors on Human Teaching Dynamics}, year = {2024}, isbn = {9798400711787}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3687272.3688320}, doi = {10.1145/3687272.3688320}, booktitle = {Proceedings of the 12th International Conference on Human-Agent Interaction}, pages = {150--159}, numpages = {10}, keywords = {human-in-the-loop learning, reinforcement learning from human feedback, robot errors}, location = {Swansea, United Kingdom}, series = {HAI '24} }

- J. Staley, S. Goel, Y. Shukla, E. Short. "Agent-Centric Human Demonstrations Train World Models". Reinforcement Learning Conference (RLC), Amherst, August 2024. [PDF]

Details and BibTeX

This paper demonstrates that model-based reinforcement learning (MBRL) can efficiently integrate information from human demonstrations. The demonstrations are naively injected into the MBRL system's replay buffer, where they reduce learning time by an order of magnitude while also improving learning consistency. This paper advances our understanding of how to use real human demonstrations in learning from demonstration with reinforcement learning.

@article{staley2024agent, title={Agent-Centric Human Demonstrations Train World Models}, author={Staley, James and Short, Elaine and Goel, Shivam and Shukla, Yash}, journal={Reinforcement Learning Journal}, volume={4}, pages={1873--1886}, year={2024}}

- H. Owens, R. M. Aronson, E. Short. "The Limits of Robot Moderators: Evidence Against Robot Personalization and Participation Equalization in a Building Task". IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Pasadena, August 2024. [PDF]

Details and BibTeX

This paper explores robot moderation of a tower-building task, investigating the use of a multi-armed bandit to choose preferred methods of interaction as well as how often each group member is chosen. This paper adds nuance to our understanding of robots in groups, showing that although the groups notice the robot, not all robot behavior has meaningful effects on group behavior.

@inproceedings{10731239, author={Owens, Hayley and Aronson, Reuben M. and Short, Elaine Schaertl}, booktitle={2024 33rd IEEE International Conference on Robot and Human Interactive Communication (ROMAN)}, title={The Limits of Robot Moderators: Evidence Against Robot Personalization and Participation Equalization in a Building Task}, year={2024}, volume={}, number={}, pages={1463--1470}, keywords={Correlation;Statistical analysis;Atmospheric measurements;Buildings;Focusing;Particle measurements;User experience;Behavioral sciences;Bayes methods;Robots}, doi={10.1109/RO-MAN60168.2024.10731239}}

- H. Yu, Q. Fang, S. Fang, R. M. Aronson, E. Short. "How Much Progress Did I Make? An Unexplored Human Feedback Signal for Teaching Robots". IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Pasadena, August 2024. [PDF]

Details and BibTeX

This work explores progress feedback, a teaching signal indicating task completion percentage, as a way to enhance how humans teach robots. Through two online studies and a public-space deployment with a total of 116 participants, we show that progress feedback effectively communicates task success, completion level, and unproductive behavior, while remaining consistent across users and not increasing workload. We also contribute a dataset of 40 real-world, non-expert demonstrations that reveal natural teaching behavior, including multi-policy and exploratory actions. This paper introduces a new potential signal for human teaching of robots, opening directions for future work on novel teaching signals.

@inproceedings{yu2024much, title={How Much Progress Did I Make? An Unexplored Human Feedback Signal for Teaching Robots}, author={Yu, Hang and Fang, Qidi and Fang, Shijie and Aronson, Reuben M and Short, Elaine Schaertl}, booktitle={2024 33rd IEEE International Conference on Robot and Human Interactive Communication (ROMAN)}, pages={1739--1746}, year={2024}, organization={IEEE}}

- I. Sheidlower, E. Bethel, D. Lilly, R. M. Aronson, E. Short. "Imagining In-distribution States: How Predictable Robot Behavior Can Enable User Control Over Learned Policies". IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Pasadena, August 2024. [PDF]

Details and BibTeX

This work introduces Partitioned Control (PC), where users and a reinforcement learning (RL) policy share control of a robot, allowing for flexible and creative task collaboration. However, standard RL policies may react poorly to user inputs that appear out-of-distribution. To address this, the authors propose IODA (Imaginary Out-of-Distribution Actions), an algorithm that helps robots align with user expectations even in novel scenarios. A user study shows that IODA improves task success and robot-user alignment, emphasizing the importance of designing RL systems that adapt to human input during shared control. This work introduces a new paradigm for interaction between users and RL-trained robots that empowers users to use the robot to do new and unexpected things.

@inproceedings{sheidlower2024imagining, title={Imagining In-distribution States: How Predictable Robot Behavior Can Enable User Control Over Learned Policies}, author={Sheidlower, Isaac and Bethel, Emma and Lilly, Douglas and Aronson, Reuben M and Short, Elaine Schaertl}, booktitle={2024 33rd IEEE International Conference on Robot and Human Interactive Communication (ROMAN)}, pages={1308--1315}, year={2024}, organization={IEEE}}

- R. M. Aronson, E. Short. "Intentional User Adaptation to Shared Control Assistance". ACM/IEEE International Conference on Human-Robot Interaction (HRI), Boulder, March 2024. [PDF]

Details and BibTeX

Shared control systems often assume users behave consistently regardless of assistance, but this study shows otherwise: users adapt their control strategies in response to assistive robot behavior. In both a controlled bubble-popping task and an in-the-wild robot cup-picking task, participants altered their actions to counteract or work with the assistance. This work shows that there is a strong need for assistive systems that account for user adaptation and treat users as intentional, dynamic agents in the interaction.

@inproceedings{aronson2024intentional, title={Intentional user adaptation to shared control assistance}, author={Aronson, Reuben M and Short, Elaine Schaertl}, booktitle={Proceedings of the 2024 ACM/IEEE International Conference on Human-Robot Interaction}, pages={4--12}, year={2024}}

- J. Huang, R. M. Aronson, E. Short. "Modeling Variation in Human Feedback with User Inputs: An Exploratory Methodology". ACM/IEEE International Conference on Human-Robot Interaction (HRI), Boulder, March 2024. [PDF]

Details and BibTeX

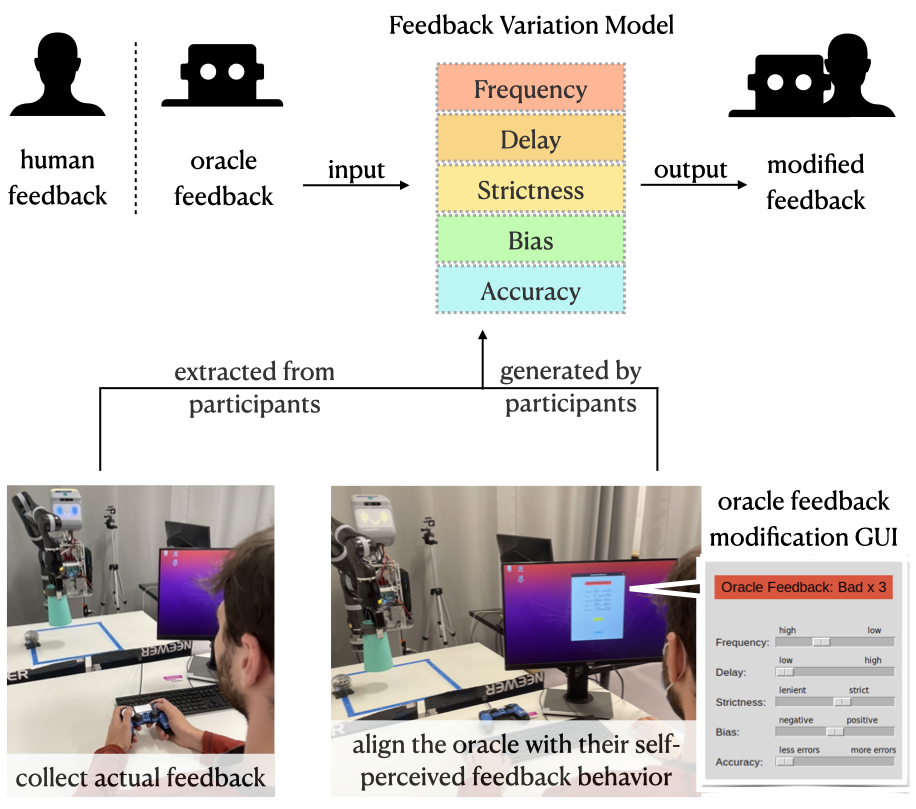

Interactive reinforcement learning (IntRL) often relies on perfect oracles that provide idealized, error-free feedback, but this fails to reflect the variability of real human input. To address this, we propose a feedback variation model based on five dimensions from prior research, enabling the transformation of oracle feedback into more human-like responses. Simulation results show that these variations can significantly affect learning performance. A proof-of-concept study further demonstrates how the model can be grounded in real user data, capturing diverse feedback patterns and highlighting the gap between human and oracle behavior. This approach offers a more realistic foundation for developing and evaluating IntRL systems, and highlights some problems of using pre-trained models in place of real human feedback when testing IntRL algorithms.

@inproceedings{huang2024modeling, title={Modeling variation in human feedback with user inputs: An exploratory methodology}, author={Huang, Jindan and Aronson, Reuben M and Short, Elaine Schaertl}, booktitle={Proceedings of the 2024 ACM/IEEE International Conference on Human-Robot Interaction}, pages={303--312}, year={2024}}

- I. Sheidlower, M. Murdock, E. Bethel, R. M. Aronson, E. Short. "Online Behavior Modification for Expressive User Control of RL-Trained Robots". ACM/IEEE International Conference on Human-Robot Interaction (HRI), Boulder, March 2024. [PDF]

Details and BibTeX

Reinforcement Learning (RL) allows robots to learn tasks autonomously, but offers limited user control after deployment. To address this, we propose online behavior modification, enabling real-time user adjustments to robot behavior during task execution. We introduce ACORD, a behavior-diversity–based algorithm, and demonstrate its effectiveness through simulation and a user study. Results show that ACORD supports user-preferred control and expressiveness, comparable to Shared Autonomy, while retaining the autonomy and robustness of RL. This work provides a best-of-both-worlds way to ensure "good" robot behavior while still giving users control over the system.

@inproceedings{sheidlower2024online, title={Online Behavior Modification for Expressive User Control of RL-Trained Robots}, author={Sheidlower, Isaac and Murdock, Mavis and Bethel, Emma and Aronson, Reuben M and Short, Elaine Schaertl}, booktitle={Proceedings of the 2024 ACM/IEEE International Conference on Human-Robot Interaction}, pages={639--648}, year={2024}}

2023

- K. H. Allen, A. K. Balaska, R. M. Aronson, C. Rogers, E. Short. "Barriers and Benefits: The Path to Accessible Makerspaces". International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS), New York, October 2023. [PDF]

Details and BibTeX

Motivated by the philosophical overlap between makerspace culture and the needs of assistive technology users, we investigated the ways that makerspaces can support the development of new technologies by and for disabled makers. Using semi-structured interviews, we identified five categories of barriers to makerspace participation and highlighted ways makerspaces can better welcome makers with disabilities. This work advances our understanding of how disabled people approach making and of the disconnects between the people who run makerspaces and the needs of disabled users of those spaces.

@inproceedings{allen2023barriers, title={Barriers and benefits: the path to accessible makerspaces}, author={Allen, Katherine H and Balaska, Audrey K and Aronson, Reuben M and Rogers, Chris and Short, Elaine Schaertl}, booktitle={Proceedings of the 25th International ACM SIGACCESS Conference on Computers and Accessibility}, pages={1--14}, year={2023}}

- H. Yu, R. M. Aronson, K. H. Allen, E. Short. "From 'Thumbs Up' to '10 out of 10': Reconsidering Scalar Feedback in Interactive Reinforcement Learning". International Conference on Intelligent Robots and Systems (IROS), Detroit, October 2023. [PDF]

Details and BibTeX

Learning from human feedback helps robots improve in exploration-heavy tasks, but scalar feedback is often overlooked due to concerns about its noise and instability. This study compares scalar and binary feedback, finding that while scalar feedback is initially less consistent, the difference disappears with minor tolerance for variation. Both feedback types show similar correlations with RL targets. To enhance scalar feedback, the authors propose STEADY, a method that models feedback as multi-distributional and re-scales it using statistical patterns. Experiments show that scalar feedback combined with STEADY outperforms other approaches. This work presents a novel way of using scalar feedback and shows that clever design of interactions can support effective learning even when real user behavior does not match algorithmic assumptions.

@inproceedings{yu2023thumbs, title={From “Thumbs Up” to “10 out of 10”: Reconsidering Scalar Feedback in Interactive Reinforcement Learning}, author={Yu, Hang and Aronson, Reuben M and Allen, Katherine H and Short, Elaine Schaertl}, booktitle={2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, pages={4121--4128}, year={2023}, organization={IEEE}}

2021-2022

- I. Sheidlower, A. Moore, E. Short. "Keeping Humans in the Loop: Teaching via Feedback in Continuous Action Space Environments". International Conference on Intelligent Robots and Systems (IROS), Kyoto, October 2022. [PDF]

Details and BibTeX

Interactive Reinforcement Learning (IntRL) has traditionally focused on slow, discrete-action tasks, limiting its use in complex robotics. To address this, the authors introduce CAIR, the first IntRL algorithm for continuous action spaces. CAIR blends environment- and teacher-learned policies by weighting them based on their agreement, enabling robust learning even with noisy human feedback. In both simulation and a human user study, CAIR outperforms previous state-of-the-art IntRL methods, demonstrating its effectiveness for more challenging robotic tasks. This paper combines learning methods that were state of the art at the time with human-in-the-loop methods, and enables policy shaping to be used with more modern learning algorithms than was previously possible.

@inproceedings{sheidlower2022keeping, title={Keeping humans in the loop: Teaching via feedback in continuous action space environments}, author={Sheidlower, Isaac and Moore, Allison and Short, Elaine}, booktitle={2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, pages={863--870}, year={2022}, organization={IEEE}}

- S.Y. Lo, E. Short, A. Thomaz. "Robust Planning with Emergent Human-Like Behavior for Agents Traveling in Groups". International Conference on Robotics and Automation (ICRA), Online, July 2021. [PDF]

2020 and earlier

- M. L. Chang, T. K. Faulkner, T. B. Wei, E. S. Short, G. Anandaraman, A. L. Thomaz. "TASC: Teammate Algorithm for Shared Cooperation". IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Online, October 2020. [PDF]

- A. D. Allevato, E. S. Short, M. Pryor, A. L. Thomaz. "Iterative residual tuning for system identification and sim-to-real robot learning". Autonomous Robots, Volume 44, Issue 7, Pages 1167-1182, September 2020. [PDF]

- M. L. Chang, Z. Pope, E. S. Short, A. L. Thomaz. "Defining Fairness in Human-Robot Teams". IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Online, 2020. [PDF] https://drive.google.com/file/u/2/d/1fLxE0to5jpXtH3iYUZja5vvu6FLTZADx/view

- T. Kessler Faulkner, E. Short, A. Thomaz. "Interactive Reinforcement Learning with Inaccurate Feedback". International Conference on Robotics and Automation (ICRA), Online, May 2020. [PDF]

- S.Y. Lo, E. Short, A. Thomaz. "Planning with Partner Uncertainty Modeling for Efficient Information Revealing in Teamwork". ACM/IEEE Conference on Human-Robot Interaction (HRI), Online, March 2020. [PDF]

- A. Allevato, E. Short, M. Pryor, A. Thomaz. "Learning Labeled Robot Affordance Models by using Simulations and Crowdsourcing". Robotics: Science and Systems (RSS), Online, July 2020. [PDF]

- C. Bethel, M. Bruijnes, M. Jung, C. Mavrogiannis, S. Parsons, C. Pelachaud, R. Prada, L. Riek, S. Strohkorb Sebo, J. Shah, E. Short, M. Vazquez. "Working Group on Social Cognition for Robots and Virtual Agents". Dagstuhl Reports, Volume 9, Issue 10, Seminar on Social Agents for Teamwork and Group Interactions, October 2019. [PDF]

- A. Allevato, E. Short, M. Pryor, and A. L. Thomaz. "TuneNet: One-Shot Residual Tuning for System Identification and Sim-to-Real Robot Task Transfer". Conference on Robot Learning (CoRL), Osaka, Japan, 2019. [PDF]

- A. Saran, E. Short, A. L. Thomaz, and S. Niekum. "Understanding Teacher Gaze Patterns for Robot Learning". Conference on Robot Learning (CoRL), Osaka, Japan, 2019. [PDF]

- T. Fitzgerald, E. Short, A. Goel, and A. L. Thomaz. "Human-guided Trajectory Adaptation for Tool Transfer". International Conference on Autonomous Agents and Multiagent Systems (AAMAS), Montreal, Canada, 2019. [PDF]

- T. Kessler Faulkner, R. A. Gutierrez, E. Short, G. Hoffman, and A. L. Thomaz. "Active Attention-Modified Policy Shaping". International Conference on Autonomous Agents and Multiagent Systems (AAMAS), Montreal, Canada, 2019. [PDF]

- E. Short, A. Allevato, and A. L. Thomaz. "SAIL: Simulation-Informed Active In-the-Wild Learning". ACM/IEEE Conference on Human-Robot Interaction (HRI), Daegu, South Korea, 2019. [PDF]

- T. Kessler Faulkner, E. Short, and A. L. Thomaz. "Policy Shaping with Supervisory Attention Driven Exploration". IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 2018. [PDF]

- A. Saran, S. Majumdar, E. Short, A. L. Thomaz, and S. Niekum. "Human Gaze Following for Human-Robot Interaction". IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 2018. [PDF]

- M. L. Chang, R. A. Gutierrez, P. Khante, E. Short, and A. L. Thomaz. "Effects of Integrated Intent Recognition and Communication on Human-Robot Collaboration". IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 2018. [PDF]

- E. Short, M. L. Chang, and A. L. Thomaz. "Detecting Contingency for HRI in Open-World Environments". ACM/IEEE Conference on Human-Robot Interaction (HRI), Chicago, USA, 2018. [PDF]

- E. Short, E. C. Deng, D. Feil-Seifer, and M. J. Matarić. "Understanding Agency in Interactions Between Children With Autism and Socially Assistive Robots". Journal of Human-Robot Interaction (JHRI), vol. 6, no. 3, p. 21, 2017. [PDF]

- E. Short and M. J. Matarić. "Robot Moderation of a Collaborative Game: Towards Socially Assistive Robotics in Group Interactions". IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Lisbon, Portugal, 2017. [PDF]

- E. Short, K. Swift-Spong, S. Hyunju, K. M. Wisniewski, Z. Deanah Kim, W. Shinyi, E. Zelinski, and M. J. Matarić. "Understanding Social Interactions with Socially Assistive Robotics in Intergenerational Family Groups". IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Lisbon, Portugal, 2017. [PDF]

- K. Swift-Spong, E. Short, E. Wade, and M. J. Matarić. "Effects of Comparative Feedback from a Socially Assistive Robot on Self-Efficacy in Post-Stroke Rehabilitation". IEEE International Conference on Rehabilitation Robotics, Singapore, 2015. [PDF]

- E. Short, K. Swift-Spong, J. Greczek, A. Ramachandran, A. Litoiu, E. C. Grigore, D. Feil-Seifer, S. Shuster, J. J. Lee, S. Huang, S. Levonisova, S. Litz, J. Li, G. Ragusa, D. Spruijt-Metz, M. J. Matarić, and B. Scassellati. "How to Train Your DragonBot: Socially Assistive Robots for Teaching Children about Nutrition through Play". IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Edinburgh, Scotland, 2014. [PDF]

- E. Short, J. Hart, M. Vu, and B. Scassellati. "No fair!! An Interaction with a Cheating Robot". ACM/IEEE Conference on Human-Robot Interaction (HRI), Osaka, Japan, 2010. [PDF]

- J. Kast, J. Neuhaus, F. Nickel, H. Kenngott, M. Engel, E. Short, M. Reiter, H.-P. Meinzer, and L. Maier-Hein. "Der Telemanipulator daVinci als mechanisches Trackingsystem: Bestimmung von Präzision und Genauigkeit" [The daVinci Telemanipulator as a Mechanical Tracking System: Determining Precision and Accuracy]. Bildverarbeitung für die Medizin: Algorithmen-Systeme-Anwendungen, Heidelberg, Germany, 2009. [PDF]

Short Conference Papers, Workshop Papers, White Papers, and Poster Abstracts By Year

2021-present

- R. M. Aronson, E. S. Short. "Control-Theoretic Analysis of Shared Control Systems". RO-MAN Workshop on Variable Autonomy for Human-Robot Teaming, Pasadena, 2024. [PDF]

- I. Sheidlower, R. M. Aronson, E.S. Short. "Towards Interpretable Foundation Models of Robot Behavior: A Task Specific Policy Generation Approach". RLC Workshop on Training Agents with Foundation Models, Amherst, 2024. [PDF]

- K. Allen, R. Aronson, T. Bhattacharjee, F. Broz, M. L. Chang, M. Collier, T. Kessler Faulkner, H. R. Lee, I. Neto, K. Winkle, and E. S. Short. "Assistive Applications, Accessibility, and Disability Ethics in HRI". Workshop Proposal for the ACM/IEEE International Conference on Human-Robot Interaction (HRI), Boulder, CO, March 2024. [PDF]

- I. Sheidlower, R. M. Aronson, E.S. Short. "Modifying RL Policies with Imagined Actions: How Predictable Policies can Enable Users to Perform Novel Tasks". AAAI Fall Symposium 2023, AI-HRI, Arlington, 2023.

- I. Sheidlower, E. S. Short, A. Moore. "Environment Guided Interactive Reinforcement Learning: Learning from Binary Feedback in High-Dimensional Robot Task Environments". International Conference on Autonomous Agents and Multiagent Systems (AAMAS), Online, May 2022.

- M. M. A. de Graaf, G. Perugia, E. Fosch-Villaronga, A. Lim, F. Broz, E. S. Short. "Inclusive HRI: Equity and diversity in design, application, methods, and community". Workshop proposal at the ACM/IEEE Conference on Human-Robot Interaction (HRI), Online, March 2022. [PDF]

- J. Huang, E. S. Short. "Modeling Human Feedback Behavior for Interactive Reinforcement Learning". HRI Workshop on Modeling Human Behavior in Human-Robot Interactions, Online, 2022.

- H. Yu, E. S. Short. "Active Feedback Learning with Rich Feedback". ACM/IEEE Conference on Human-Robot Interaction Late-breaking Report (HRI-LBR), Online, 2021.

- I. S. Sheidlower, E. S. Short. "When Oracles Go Wrong: Using Preferences as a Means to Explore". ACM/IEEE Conference on Human-Robot Interaction Late-breaking Report (HRI-LBR), Online, 2021.

- J. Staley, E. S. Short. "Contingency Detection in Multi-Agent Interactions". ACM/IEEE Conference on Human-Robot Interaction Late-breaking Report (HRI-LBR), Online, 2021.

- A. Cleaver, D. V. Tang, V. Chen, E. S. Short, J. Sinapov. "Dynamic Path Visualization for Human-Robot Collaboration". ACM/IEEE Conference on Human-Robot Interaction Late-breaking Report (HRI-LBR), Online, 2021.

- J. Huang, E. S. Short. "Building a Better Oracle: Using Personas to Create More Human-Like Oracles". HRI Workshop on Research Through Design Approaches in Human-Robot Interaction (RtD-HRI), Online, 2021.

2019-2020

- S.-Y. Lo, E. S. Short, A. L. Thomaz. "Robust Following with Hidden Information in Travel Partners". Extended Abstract at the International Conference on Autonomous Agents and Multiagent Systems (AAMAS), Online, 2020.

- A. Cleaver, E. Short, J. Sinapov. "RAIN: A Vision Calibration Tool using Augmented Reality". Workshop on Virtual, Augmented, and Mixed Reality for Human-Robot Interaction (VAM-HRI), Online, 2020.

2018 and earlier

Please visit Dr. Short's Google Scholar page for a list of pre-2019 workshop papers.